A threat hunting program backed by the right metrics and proper documentation of hunts can reduce breach costs and, in the event of a breach, demonstrate operational maturity to insurers and regulators. Vanity metrics, like how many hunts are performed by an individual hunter, might look like good management but can lead the hunt program astray or drive talent away.

“Good” metrics help focus the program on efficient and effective hunt processes by tracking visibility gaps closed, detections generated, incidents escalated and vulnerabilities remediated. These key outputs from proactive hunts drive security operations center (SOC) efficiency and reduce mean-time-to-detection (MTTD) and mean-time-to-response (MTTR) — key metrics that show how quickly defenders discover and contain potential security incidents.

Over time, intelligence-driven proactive hunts (conducted before alerts fire), paired with consistent reporting and metrics, translate hunt work into business outcomes that matter to leaders in security, finance and risk. This makes it vital for hunt teams to consistently track metrics and document findings and resolutions, which can contribute to reduced breach costs and improved cyber insurance terms. Well-documented risk identification processes also help mitigate regulatory enforcement risks.

The best metrics help create a common understanding of threat hunt “success” across the organization, helping leaders make better decisions about investments in their people, processes and security and logging platforms, such as endpoint detection and response (EDR) and security information and event management (SIEM) platforms.

Good, Bad and Ugly Hunt Metrics

Metrics that show hunt effectiveness are not always obvious. As Will Thomas, an intelligence analyst at Team Cymru, recently noted, “Finding that an entire category of edge devices is not being logged in the SIEM and is therefore unmonitored is a much bigger win than saying you triaged the results of 50 threat hunting queries in a given week.”

The rate of triaging might suggest efficiency in threat identification, but removing this blind spot should help address the increasingly popular tactic of exploiting unpatched VPN gateways and firewall appliances, which often don’t support EDR.

The UK government outlines several useful threat hunting metrics that matter to the stakeholders who use them for decision-making, such as the CISO, threat intelligence teams or the hunt program leader.

Good metrics to demonstrate hunt outcomes before an alert:

- Number of incidents identified proactively (vs. after alerts)

- Number of vulnerabilities identified proactively (vs. after vulnerability assessments)

- Dwell time of proactively discovered incidents (vs. after alerts)

- Containment time of proactively discovered incidents (vs. after alerts)

- Effort per remediation of proactively discovered incidents

- Data types and coverage of estate — to show visibility gaps

- Hypotheses per MITRE ATT&CK tactic

- Hunts per MITRE ATT&CK tactic — to show hunt coverage of threats
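As a rough illustration, a few of these metrics could be computed from a team's own incident and hunt records along the following lines. This is a minimal Python sketch: the `Incident` record, its field names (`source`, `compromised_at`, `detected_at`, `contained_at`) and all sample values are assumptions made for the example, not a format prescribed by the UK government guidance or by any product.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    # Hypothetical record layout, assumed for this example.
    source: str               # "hunt" (found proactively) or "alert" (found after an alert)
    compromised_at: datetime  # best estimate of initial compromise
    detected_at: datetime
    contained_at: datetime


def mean_hours(deltas):
    """Average a list of timedeltas in hours; None if the list is empty."""
    if not deltas:
        return None
    return mean(d.total_seconds() for d in deltas) / 3600


def summarize(incidents, source):
    """Count, mean dwell time and mean containment time for one discovery source."""
    subset = [i for i in incidents if i.source == source]
    return {
        "count": len(subset),
        "mean_dwell_hours": mean_hours([i.detected_at - i.compromised_at for i in subset]),
        "mean_containment_hours": mean_hours([i.contained_at - i.detected_at for i in subset]),
    }


# Made-up sample data.
t0 = datetime(2025, 1, 6, 9, 0)
incidents = [
    Incident("hunt", t0, t0 + timedelta(hours=20), t0 + timedelta(hours=26)),
    Incident("hunt", t0, t0 + timedelta(hours=28), t0 + timedelta(hours=32)),
    Incident("alert", t0, t0 + timedelta(hours=72), t0 + timedelta(hours=90)),
]

# One ATT&CK tactic name per completed hunt (also made up).
hunt_tactics = ["Initial Access", "Persistence", "Persistence", "Exfiltration"]
hunts_per_tactic = Counter(hunt_tactics)

proactive = summarize(incidents, "hunt")
reactive = summarize(incidents, "alert")
print(proactive)
print(reactive)
print(dict(hunts_per_tactic))
```

Comparing the `proactive` and `reactive` summaries side by side is what gives the "vs. after alerts" framing in the list above. The tactic counts only show where hunting effort went, so they are best read alongside the data coverage metric rather than on their own.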

These are a good starting point for measuring outcomes, but it’s also important for teams to be aware of metrics that reward activity over impact. Kostas T, an independent security consultant, outlines how different hunt metrics can improve, mislead or harm security strategy in his post, Threat Hunting Metrics: The Good, The Bad and The Ugly.

Good metrics show impact and effectiveness. For example, the “number of new detections proposed by the hunt team for threats undetected by other means” shows the hunt team’s direct contributions to new detections. New data points that reduce false positives or improve existing detection alerts connect directly to improved MTTD and MTTR. Completed hunting sessions within a given period show activity and efficiency at a team level.

Bad metrics can be distractions, such as the number of hunt reports generated, which doesn’t reflect the substance of reporting to stakeholders.

Ugly metrics can harm the program, such as tracking the number of hunts per hunter, which can risk burnout and drive higher staff turnover.

How Proactive Hunting, Metrics and Documentation Reduce Risk

- Regulators: Documentation of hunt activities shows the technical and organizational measures taken to identify risk. If an incident occurs, robust hunt records and metrics can demonstrate due diligence, and trend data can show efforts to improve MTTD and MTTR.

- Insurers: Cyber insurers are emphasizing EDR. A proactive hunt program provides evidence of control validation, such as whether EDR logging and detections actually cover the organization’s risk priorities.

Case Example: How Hunt-Driven Control Validation Can Improve Tooling

In the 2023 breach of U.K.-based Capita PLC, the firm’s EDR generated a “high” priority alert within minutes of a malicious file being downloaded but did not escalate it to “critical” after detecting the associated download of the Qakbot infostealer malware. The misclassified alert contributed to the SOC not acting on it until 58 hours later. During that window, the attacker exfiltrated data affecting 6.6 million people and deployed Black Basta ransomware. The delayed response contributed to a £14 million GDPR penalty by the U.K. Information Commissioner’s Office, which noted the EDR should have automatically escalated the alert to “critical” as soon as Qakbot was detected. (See our white paper The Black Basta Blueprint to view hunt packages we’ve developed to identify Black Basta techniques.)

The lesson: Cyber resilience regulations and cyber insurance policies often require EDR. However, EDR configurations and detection tuning affect alerting and response times. Qakbot, which emerged in 2007 and is still active, was known well before 2023 to deliver Black Basta, Conti and REvil ransomware. Proactive threat hunts paired with emulation and validation can verify that detections fire properly and identify gaps in EDR endpoint logging. These hunts can also evaluate an EDR’s true level of visibility by running hunt packages that test telemetry across registry modification events, network connections, DNS activity and file creation activity, rather than just the process creation events commonly logged by EDR platforms. Documenting proactive control validation can provide evidence under regulatory scrutiny.
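One way to picture that kind of visibility check is to compare the telemetry categories a hunt expects against the event types actually seen for each host. The Python sketch below is hypothetical: the category names, host names and `observed_events` sample are assumptions for illustration, and a real check would be driven by queries in the logging platform's own language.

```python
# Telemetry categories named in the text above; the identifiers are assumed labels.
REQUIRED_TELEMETRY = {
    "process_creation",
    "registry_modification",
    "network_connection",
    "dns_query",
    "file_creation",
}


def telemetry_gaps(observed_events, expected_hosts, required=REQUIRED_TELEMETRY):
    """Return {host: sorted missing categories} for hosts lacking required telemetry.

    observed_events is an iterable of (host, category) pairs, for example the
    result of a "count events by host and event type" query.
    expected_hosts comes from an asset inventory, so that hosts sending no
    telemetry at all are still reported.
    """
    seen = {host: set() for host in expected_hosts}
    for host, category in observed_events:
        seen.setdefault(host, set()).add(category)

    gaps = {}
    for host, categories in seen.items():
        missing = required - categories
        if missing:
            gaps[host] = sorted(missing)
    return gaps


# Made-up sample: one partially logged workstation, one fully logged
# workstation and one edge device that sends nothing.
expected_hosts = ["ws-01", "ws-02", "vpn-gw-01"]
observed_events = [
    ("ws-01", "process_creation"),
    ("ws-01", "dns_query"),
] + [("ws-02", category) for category in REQUIRED_TELEMETRY]

gaps = telemetry_gaps(observed_events, expected_hosts)
for host, missing in gaps.items():
    print(host, "is missing:", ", ".join(missing))
```

Seeding the result from an inventory list, rather than from the events alone, is a deliberate choice here: a device that never logs anything would otherwise be invisible to the check, which is exactly the kind of blind spot a hunt is meant to surface.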

How HUNTER Tracks the Good Metrics and Mitigates the Bad

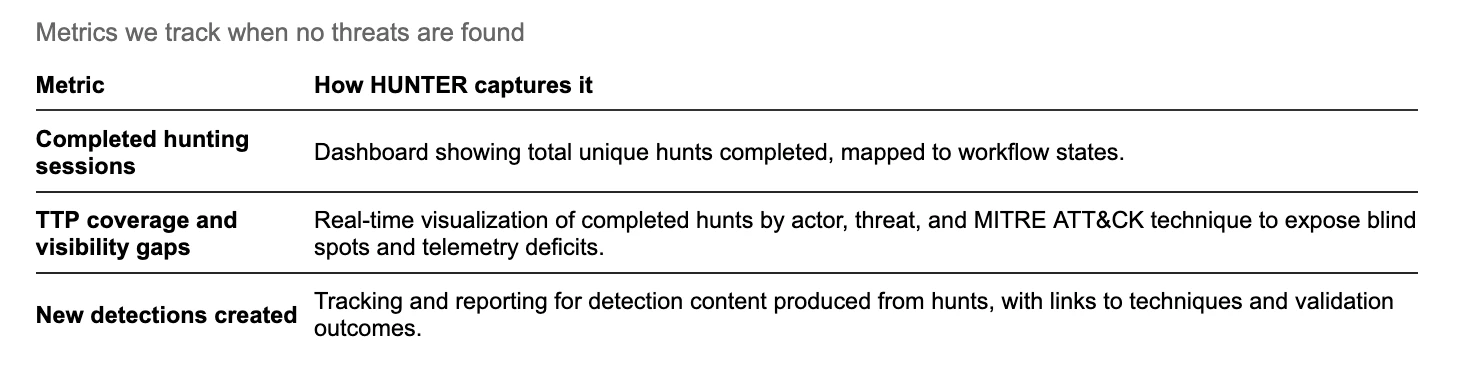

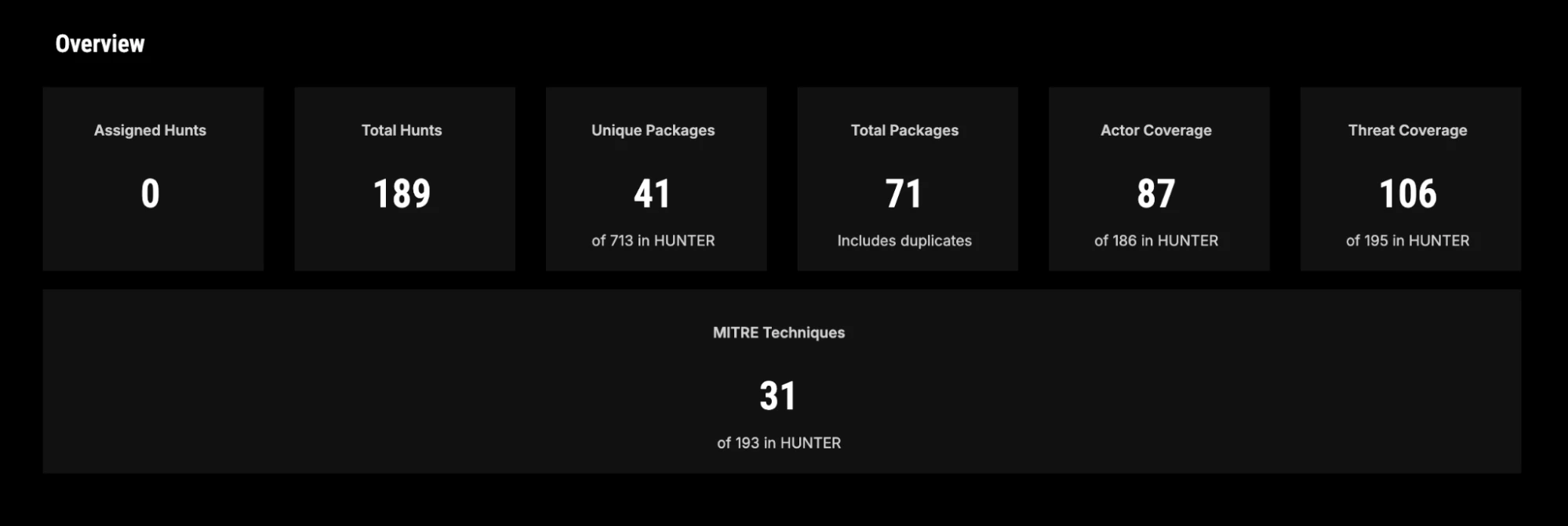

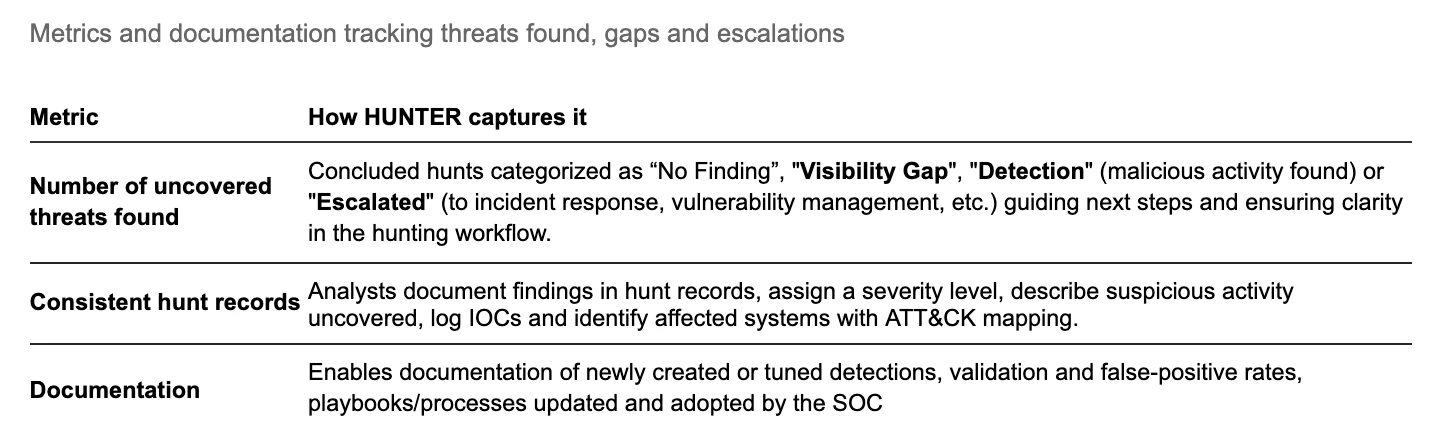

We designed the Intel 471 HUNTER Hunt Management Module (HMM) to operationalize hunts and make it simple to track good metrics and report outcomes, whether threats are found or not. The module also allows teams to build repeatable hunt campaigns against top risk categories and emerging threats, provides guidance on pivoting and filtering during hunts and helps hunt teams coordinate escalation to incident response when threats are found. Using HUNTER to drive more hunts, threat hunting teams can provide more value to detection engineering and contribute measurable increases in newly created detections.

HMM’s auto-generated post-hunt reporting saves time and improves consistency. Manually writing reports after each hunt can take days spent summarizing the hunt, detailing findings (undetected threats, misconfigurations, visibility gaps) and explaining their impact on organizational risk. HMM translates this activity into tactical, operational and strategic reporting that helps prioritize future hunts, enhances repeatability and supports the business case for the hunt program.

The module helps hunt programs maximize the impact of the HUNTER hunt package library, which consists of:

- 750+ behavioral hunt packages across ~200 MITRE ATT&CK techniques

- Pre-validated queries in the native query language of over 20 platforms

- ~30 data points of CTI context per package and rich hunt package tagging (by actor, threat, targeting, MITRE IDs) for filtering and hunt campaign building

- Emerging Threat Collection hunt packages to prioritize new and trending threat activity

- Analyst runbooks to standardize hunt workflows

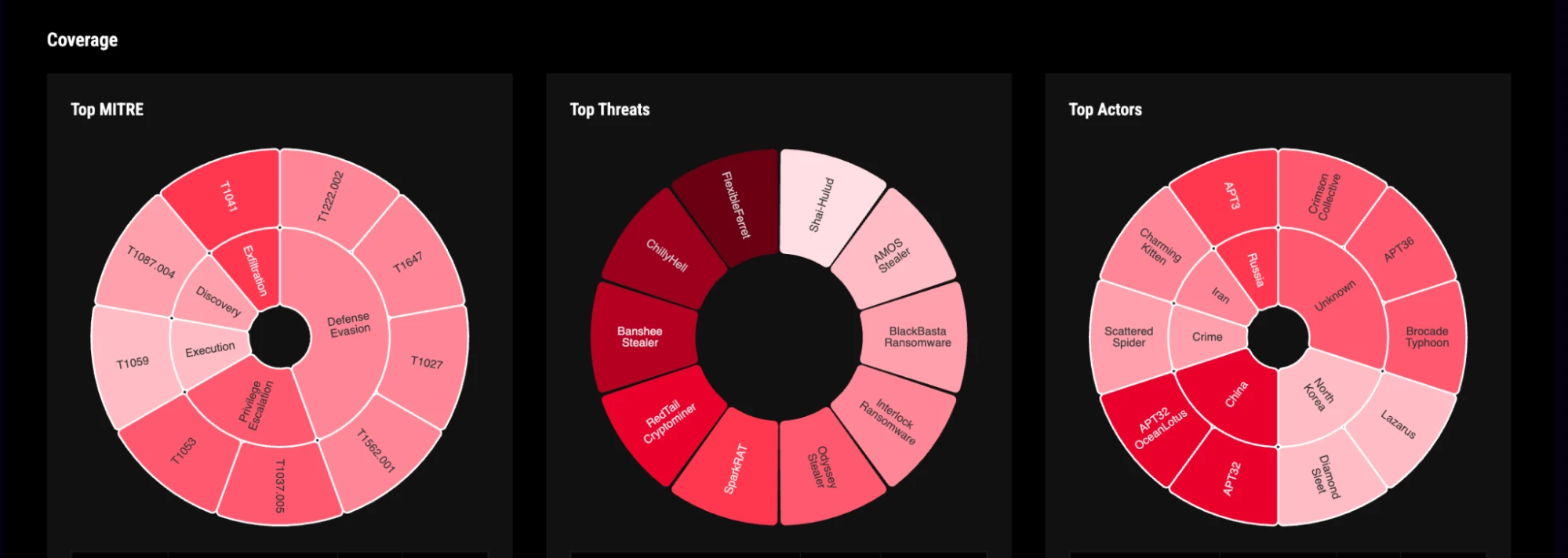

Image: HUNTER Dashboard overview of missions completed, hunt packages used, threat coverage and MITRE mapping.

Image: A visual breakdown showing threats covered by previously run behavioral hunts.

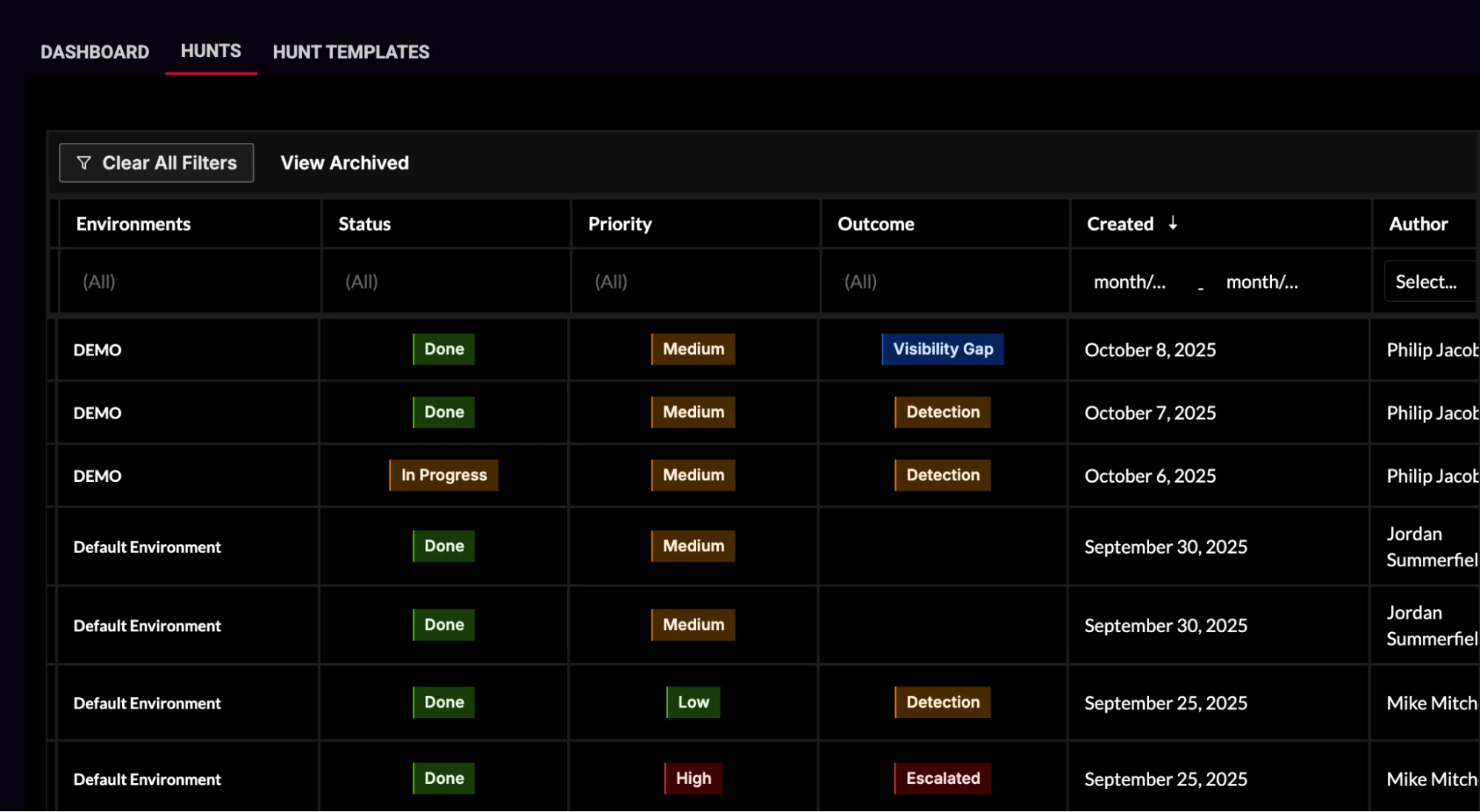

Image: Hunt Management Module tracking hunt outcomes by visibility gaps, detections and escalations.

Reporting Efficiency and Outcome-Focused Metrics

HUNTER can auto-generate detailed reports covering the total number of hunts the team has completed, a breakdown of true positives, false positives and no findings, average hunt completion time and coverage against specific MITRE ATT&CK techniques.
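To make those report fields concrete, here is a minimal sketch of how a team might derive the same kinds of figures from its own hunt log. The `Hunt` record, its field names, the outcome labels and the sample values are all assumptions for illustration; they do not represent HUNTER's data model or API.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class Hunt:
    # Hypothetical hunt log entry, assumed for this example.
    technique: str  # MITRE ATT&CK technique ID the hunt targeted
    outcome: str    # "true_positive", "false_positive" or "no_findings"
    hours: float    # time taken to complete the hunt


def summarize_hunts(hunts, techniques_in_scope):
    """Summarize totals, outcome breakdown, average duration and technique coverage."""
    in_scope = set(techniques_in_scope)
    covered = {h.technique for h in hunts} & in_scope
    return {
        "total_hunts": len(hunts),
        "outcomes": dict(Counter(h.outcome for h in hunts)),
        "avg_completion_hours": mean(h.hours for h in hunts) if hunts else None,
        # Share of in-scope techniques touched by at least one hunt.
        "technique_coverage": len(covered) / len(in_scope) if in_scope else None,
    }


# Made-up sample data.
hunts = [
    Hunt("T1059", "true_positive", 6.0),
    Hunt("T1547", "no_findings", 3.0),
    Hunt("T1059", "false_positive", 4.5),
    Hunt("T1071", "no_findings", 2.5),
]
techniques_in_scope = ["T1059", "T1547", "T1071", "T1003", "T1021"]

report = summarize_hunts(hunts, techniques_in_scope)
print(report)
```

Note that hunts ending in "no findings" are counted alongside the others rather than discarded, in keeping with the idea of reporting outcomes whether threats are found or not.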

The reports can provide the basis for evidence packages to meet audit, insurance and regulatory needs. They also provide valuable insights into team performance and gaps in detection or prevention, and clearly demonstrate the value of threat hunting activity. Reports are exportable as editable Word documents or PDFs, with options for executive summaries or full technical details.

Request a HUNTER demo to see your current TTP coverage, identify blind spots and generate exportable reports. If you’re interested in trying HUNTER hunt packages, sign up for a HUNTER Community account today and experience a subset of Intel 471’s intelligence-driven behavioral threat hunting packages on your security platforms.